When working on Machine Learning projects for microcontrollers and embedded devices, the number of features can become a limiting factor due to the limited memory: dimensionality reduction (e.g. PCA) will help you shrink your models and even achieve higher prediction accuracy.

Why dimensionality reduction on Arduino microcontrollers?

Dimensionality reduction is a technique you often see in Machine Learning projects. By stripping away "unimportant" or redundant information, it generally helps speed up the training process and achieve higher classification performance.

Since we now know we can run Machine Learning on Arduino boards and embedded microcontrollers, it can become a key tool at our disposal to squeeze the most out of our boards.

In the specific case of resource-constrained devices such as older Arduino boards (the UNO, for example, has only 2 KB of RAM), it can be decisive in unlocking even more application scenarios where the high dimensionality of the input features would not allow any model to fit.

Let's take the Gesture classification project as an example: among the different classifiers we trained, only one fit on the Arduino UNO, since most of them required too much flash memory due to the high number of features (90) and support vectors (25 to 61).
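A quick back-of-the-envelope calculation shows why the number of features matters so much. The sketch below only counts the raw support vector coefficients, assuming each one is stored as a 4-byte float (float and double are both 4 bytes on the AVR-based UNO); the generated code, dual coefficients and intercepts are ignored, so real flash usage is higher. The name supportVectorBytes is hypothetical.

```cpp
// Rough estimate of the flash needed just for the support vectors:
// one value per feature per vector, 4 bytes per value by default.
// Generated code, dual coefficients and intercepts are NOT counted.
constexpr unsigned long supportVectorBytes(unsigned long numVectors,
                                           unsigned long numFeatures,
                                           unsigned long bytesPerValue = 4) {
    return numVectors * numFeatures * bytesPerValue;
}

// The ATmega328P on the Arduino UNO has 32 KB of flash in total.
constexpr unsigned long kUnoFlashBytes = 32UL * 1024UL;

// Largest model in the gesture project: 61 support vectors x 90 features.
constexpr unsigned long kLargestModelBytes = supportVectorBytes(61, 90);
static_assert(kLargestModelBytes == 21960UL, "61 x 90 x 4 bytes");
```

21,960 bytes is already about two thirds of the UNO's 32,768 bytes of flash, before a single instruction of prediction code is added.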

In this post I will revisit that example and see if dimensionality reduction can help close this gap.

If you are working on a project with many features, let me know in the comments so I can create a detailed list of real-world examples.

How to export PCA (Principal Component Analysis) to plain C

Among the many algorithms available for dimensionality reduction, I decided to start with PCA (Principal Component Analysis) because it's one of the most widespread. In the coming weeks I will probably work on porting other alternatives.
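For reference, once a PCA is fitted its transform step is cheap: scikit-learn subtracts the per-feature mean learned during fit and projects the centered input onto the K principal axes (with whiten=True it additionally divides each output by the square root of that component's explained variance). The sketch below is a hand-written illustration of the non-whitened case in plain C++, not the code micromlgen generates; the names pcaTransform, kMean and kComponents and their values are hypothetical.

```cpp
// Hypothetical PCA reducing 3 input features to 2 components.
const int kInputs = 3;
const int kOutputs = 2;

// Made-up values standing in for what PCA.fit learns:
// the mean of each feature and one unit-length principal axis per row.
const float kMean[kInputs] = {1.0f, 2.0f, 3.0f};
const float kComponents[kOutputs][kInputs] = {
    {1.0f, 0.0f, 0.0f},
    {0.0f, 0.6f, 0.8f},
};

// y[k] = sum over j of kComponents[k][j] * (x[j] - kMean[j])
void pcaTransform(const float x[kInputs], float y[kOutputs]) {
    for (int k = 0; k < kOutputs; k++) {
        float acc = 0.0f;
        for (int j = 0; j < kInputs; j++) {
            acc += kComponents[k][j] * (x[j] - kMean[j]);
        }
        y[k] = acc;
    }
}
```

For x = {2, 2, 4} the centered input is {1, 0, 1}, so y = {1.0, 0.8}. The projection costs K × N multiply-adds and stores K × N + N floats, so the reduction only pays off when the model that follows it saves more than that.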

If you have never used my Python package micromlgen, I first invite you to read the introduction post to get familiar with it.

Always remember to install the latest version, since I publish frequent updates.

```shell
pip install --upgrade micromlgen
```

Now it is pretty straightforward to convert a scikit-learn PCA transformer to plain C: you use the magic function port. In addition to converting SVM/RVM classifiers, it can now export PCA too.

```python
from sklearn.decomposition import PCA
from sklearn.datasets import load_iris
from micromlgen import port

if __name__ == '__main__':
    X = load_iris().data
    # keep 2 principal components out of the 4 IRIS features
    pca = PCA(n_components=2, whiten=False).fit(X)
    print(port(pca))
```

How to deploy PCA to Arduino

To use the exported code, we first have to include it in our sketch. Save the contents to a file (I named it pca.h) in the same folder as your .ino project and include it.

```cpp
#include "pca.h"

// this was trained on the IRIS dataset, with 2 principal components
Eloquent::ML::Port::PCA pca;
```

The pca object is now able to take an array of size N as input and fill an array of size K as output, where K is usually smaller than N.

```cpp
void setup() {
    // one IRIS sample: 4 input features
    float x_input[4] = {5.1, 3.5, 1.4, 0.2};
    // room for the 2 principal components
    float x_output[2];

    pca.transform(x_input, x_output);
}

void loop() {
}
```

That's it: now you can run your classifier on x_output.

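```cpp
#include "pca.h"
#include "svm.h"

Eloquent::ML::Port::PCA pca;
// the classifier must be trained on the PCA-transformed features
Eloquent::ML::Port::SVM clf;

void setup() {
    float x_input[4] = {5.1, 3.5, 1.4, 0.2};
    float x_output[2];
    int y_pred;

    // reduce 4 features to 2 principal components, then classify them
    pca.transform(x_input, x_output);
    y_pred = clf.predict(x_output);
}

void loop() {
}
```

A real-world example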

As promised, let's take a look at how PCA dimensionality reduction can help fit classifiers that would otherwise be too large for our microcontrollers.

This is the exact table from the Gesture classification project.

| Kernel | C | Gamma | Degree | Vectors | Flash size | RAM (bytes) | Avg accuracy |
|---|---|---|---|---|---|---|---|
| RBF | 10 | 0.001 | - | 37 | 53 KB | 1228 | 99% |
| Poly | 100 | 0.001 | 2 | 12 | 25 KB | 1228 | 99% |
| Poly | 100 | 0.001 | 3 | 25 | 40 KB | 1228 | 97% |
| Linear | 50 | - | 1 | 40 | 55 KB | 1228 | 95% |
| RBF | 100 | 0.01 | - | 61 | 80 KB | 1228 | 95% |

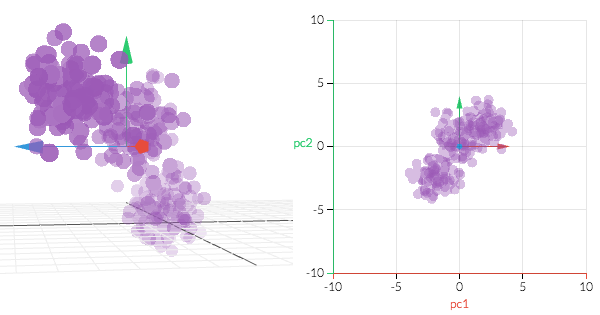

The dataset has 90 features (30 samples x 3 axes), and the best classifiers reach 99% accuracy on it.
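In other words, each gesture is 30 consecutive accelerometer readings flattened into a single vector. Here is a minimal sketch of that flattening, assuming an interleaved x, y, z order; the hypothetical flattenReadings function may not match the order used in the original project, and whatever order you choose must be the same at training and inference time.

```cpp
const int kSamples = 30;                 // readings per gesture
const int kAxes = 3;                     // x, y, z
const int kFeatures = kSamples * kAxes;  // 90 features

// Interleaved layout: {x0, y0, z0, x1, y1, z1, ..., x29, y29, z29}
void flattenReadings(const float readings[kSamples][kAxes],
                     float features[kFeatures]) {
    for (int s = 0; s < kSamples; s++) {
        for (int a = 0; a < kAxes; a++) {
            features[s * kAxes + a] = readings[s][a];
        }
    }
}
```

At 4 bytes per float, the feature buffer alone takes 360 bytes, roughly 18% of the UNO's 2,048 bytes of RAM.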

Let's pick the poly kernel with degree 2 and see how far we can decrease the number of components while still achieving good accuracy.

| PCA components | Accuracy | Support vectors |
|---|---|---|
| 90 | 99% | 31 |
| 50 | 99% | 31 |
| 40 | 99% | 31 |
| 30 | 90% | 30 |
| 20 | 90% | 28 |
| 15 | 90% | 24 |
| 10 | 99% | 18 |
| 5 | 76% | 28 |

We clearly see a few things:

- we still achieve 99% accuracy even with only 40 out of 90 principal components

- we get a satisfactory 90% accuracy even with only 15 components

- (this is a bit unexpected) there seems to be a sweet spot at 10 components, where the accuracy climbs back to 99%. This could just be a quirk of this particular dataset, so don't expect to replicate these results on your own data

What do these numbers mean for you? Your board has to perform far fewer computations to give you a prediction, and will probably be able to host a more complex model.
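To put rough numbers on this for the degree-2 polynomial kernel, compare 31 support vectors over 90 features against a 10-component PCA followed by 18 support vectors over 10 features (both taken from the table above). The sketch assumes one dot product per support vector at prediction time and one stored 4-byte float per coefficient; kernel nonlinearities, dual coefficients and intercepts are ignored, and all names are hypothetical.

```cpp
// For the support vectors, both the multiply-adds per prediction and the
// stored floats equal (number of vectors) x (vector length).
constexpr long svmCost(long supportVectors, long dims) {
    return supportVectors * dims;
}

// The PCA projection needs components x inputs multiply-adds...
constexpr long pcaMacs(long components, long inputs) {
    return components * inputs;
}

// ...and stores that matrix plus one mean value per input feature.
constexpr long pcaFloats(long components, long inputs) {
    return components * inputs + inputs;
}

// Without PCA: 31 support vectors x 90 features.
constexpr long kMacsNoPca = svmCost(31, 90);                     // 2790
constexpr long kFloatsNoPca = svmCost(31, 90);                   // 2790
// With PCA: 90 -> 10 projection, then 18 support vectors x 10 features.
constexpr long kMacsPca = pcaMacs(10, 90) + svmCost(18, 10);     // 1080
constexpr long kFloatsPca = pcaFloats(10, 90) + svmCost(18, 10); // 1170
```

That is roughly 2.6 times fewer multiply-adds (1,080 vs 2,790) and 4,680 instead of 11,160 bytes of coefficients at 4 bytes each. Note that the PCA matrix itself now accounts for most of the remaining cost.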

Let's check out the figures with n_components = 10 compared with the ones without PCA.

| Kernel | PCA support vectors | PCA flash size | Accuracy |
|---|---|---|---|
| RBF C=10 | 46 (+24%) | 32 KB (-40%) | 99% |
| RBF C=100 | 28 (-54%) | 32 KB (-60%) | 99% |
| Poly 2 | 13 (+8%) | 28 KB (+12%) | 99% |
| Poly 3 | 24 (-4%) | 32 KB (-20%) | 99% |
| Linear | 18 (-55%) | 29 KB (-47%) | 99% |

A few notes:

- accuracy increased (or stayed the same) for all kernels

- with one exception (Poly 2), flash size decreased by 20% to 60%

- now we can fit 3 classifiers on our Arduino UNO instead of only one
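The percentages in the table are relative changes with respect to the no-PCA figures of the first table. A tiny helper to reproduce them, assuming they are rounded to the nearest whole percent (percentChange is a hypothetical name):

```cpp
// Relative change of `value` with respect to `baseline`, in percent,
// rounded to the nearest integer (halves rounded away from zero).
int percentChange(double baseline, double value) {
    double pct = (value - baseline) / baseline * 100.0;
    return static_cast<int>(pct < 0 ? pct - 0.5 : pct + 0.5);
}
```

For example, the RBF kernel with C=10 goes from 37 to 46 support vectors, so percentChange(37, 46) returns 24, and from 53 KB to 32 KB of flash, so percentChange(53, 32) returns -40.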

I will probably spend some more time investigating the usefulness of PCA for Arduino Machine Learning projects, but for now that's it: in my opinion, it's a good starting point.

Troubleshooting

When running micromlgen.port(clf), you may get a TemplateNotFound error. To solve the problem, first uninstall micromlgen.

```shell
pip uninstall micromlgen
```

Then head to GitHub, download the package as a zip and extract the micromlgen folder into your project.

There's a little example sketch on GitHub that applies PCA to the IRIS dataset.

Tell me what you think could be a clever application of dimensionality reduction in the world of microcontrollers, and let's see if we can build something great together.