If you liked my post about ESP32 cam motion detection, you'll love this updated version: it's easier to use and blazing fast!

The post about pure-video ESP32 cam motion detection, without an external PIR sensor, is my most successful post at the moment: many of you are interested in this topic.

One of my readers, though, pointed out that my implementation was quite slow: he only achieved a bare 5 fps in his project, so he asked for a better alternative.

Since the post was of great interest to many people, I took the time to revisit the code and improve it.

I came up with a complete rewrite that is both easier to use and faster. Actually, it is blazing fast!

Let's see how it works.


Downsampling

In the original post I introduced the idea of downsampling the image from the camera for a faster and more robust motion detection. I wrote the code in the main sketch to keep it self-contained.

Looking back, it was a poor choice: it cluttered the project and distracted from its main purpose, which is motion detection.

Moreover, I thought that scanning the image buffer in sequential order would be the fastest approach.

It turns out I was wrong.

This time I scan the image buffer block by block, following the blocks that will compose the resulting image, and the result is... much faster.
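To make "block by block" concrete, here is a small, hypothetical C++ helper (not part of the library) that lists the source indices in the order a block-wise scan visits them, instead of plain row-by-row order. It assumes a row-major grayscale buffer, one byte per pixel, whose width and height are multiples of the block size. In a real downsampler you would accumulate pixel values inside the inner loops instead of recording indices.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical helper: returns the source-buffer indices in block-by-block order.
// Assumes a row-major grayscale image whose width and height are multiples of `block`.
std::vector<size_t> blockOrder(size_t width, size_t height, size_t block) {
    std::vector<size_t> order;
    order.reserve(width * height);
    for (size_t by = 0; by < height / block; by++) {        // block row
        for (size_t bx = 0; bx < width / block; bx++) {     // block column
            // visit every pixel of this block before moving on to the next block
            for (size_t y = by * block; y < (by + 1) * block; y++) {
                for (size_t x = bx * block; x < (bx + 1) * block; x++) {
                    order.push_back(y * width + x);
                }
            }
        }
    }
    return order;
}
```

For a 4x4 image with 2x2 blocks, the scan covers indices 0, 1, 4, 5 (the first block) before moving on to 2, 3, 6, 7, and so on.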

I also decided to squeeze out some more efficiency to further speed up the computation, by offering different strategies for downsampling.

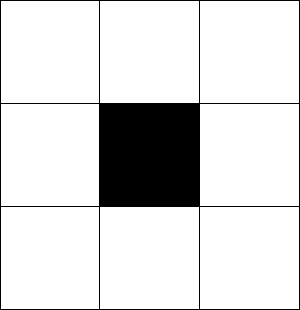

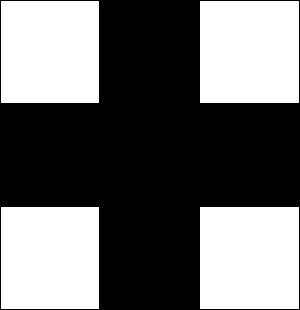

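The idea of downsampling is that you "collapse" each NxN block of the original image into just one pixel of the resulting image. For example, with the 320x240 (QVGA) frames and 10x10 blocks used in the full code, the 76,800 source pixels shrink to a 32x24 image of 768 pixels.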
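A minimal sketch of this idea, assuming the collapsing strategy is passed in as a function pointer. The names `downscale` and `BlockReducer` and their signatures are hypothetical: the full code calls the library's `downscaleImage()` with a strategy such as `nearest`, and this is not its actual implementation.

```cpp
#include <cstddef>
#include <cstdint>

// Hypothetical strategy signature: reduce the block whose top-left corner is
// (x0, y0) in a row-major grayscale image of width srcW to a single pixel.
using BlockReducer = uint8_t (*)(const uint8_t *src, size_t srcW,
                                 size_t x0, size_t y0, size_t block);

// Collapse every block x block region of src into one pixel of dst.
// Assumes srcW and srcH are multiples of block; dst must hold
// (srcW / block) * (srcH / block) bytes.
void downscale(const uint8_t *src, uint8_t *dst, size_t srcW, size_t srcH,
               size_t block, BlockReducer reduce) {
    const size_t dstW = srcW / block;
    const size_t dstH = srcH / block;
    for (size_t by = 0; by < dstH; by++) {
        for (size_t bx = 0; bx < dstW; bx++) {
            dst[by * dstW + bx] = reduce(src, srcW, bx * block, by * block, block);
        }
    }
}
```

Keeping the strategy separate from the loop means swapping the downsampling method only changes one argument.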

Now, there are a variety of ways to accomplish this. The first two I present here are the most obvious; the other three are my own "invention": nothing fancy or new, but they're fast and serve the purpose well.

Nearest neighbor

You can just pick the center pixel of the NxN block and use its value as the output.

Of course it is fast (possibly the fastest approach), but it won't be very accurate: one pixel out of NxN is hardly representative of the whole region and suffers heavily from noise.
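Here is a possible nearest neighbor strategy, written as a standalone function taking the source buffer, its width, the block's top-left corner and the block size (a hypothetical signature, not the library's). An even-sized block has no exact center pixel, so this sketch assumes the one just below and to the right of the geometric center.

```cpp
#include <cstddef>
#include <cstdint>

// Nearest neighbor: return the pixel at (or, for even sizes, just past) the
// center of the block. Only one read per block, hence the speed.
uint8_t nearestReduce(const uint8_t *src, size_t srcW,
                      size_t x0, size_t y0, size_t block) {
    return src[(y0 + block / 2) * srcW + (x0 + block / 2)];
}
```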

Full block average

This is the most intuitive alternative: use the average of all the pixels in the block as the output value. This is arguably the "proper" way to do it, since you're using all the pixels in the source image to compute the new one.
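A sketch of the full block average, using the same hypothetical standalone signature (source buffer, source width, block origin, block size). It reads all N*N pixels of the block, which is why it costs the most.

```cpp
#include <cstddef>
#include <cstdint>

// Full block average: sum every pixel of the block, then divide by N*N.
// A 32-bit accumulator is plenty: even a 64x64 block of 255s sums to 1,044,480.
uint8_t fullBlockAverage(const uint8_t *src, size_t srcW,
                         size_t x0, size_t y0, size_t block) {
    uint32_t sum = 0;
    for (size_t y = y0; y < y0 + block; y++) {
        for (size_t x = x0; x < x0 + block; x++) {
            sum += src[y * srcW + x];
        }
    }
    return static_cast<uint8_t>(sum / (block * block));  // integer (truncating) average
}
```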

Core block average

As a faster alternative, I thought that averaging only the "core" (the innermost part) of the block would be a good-enough solution. I have no theoretical proof that this holds, but our task here is to create a smaller representation of the original image, not an accurate smaller version of it.
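The post doesn't pin down how big the "core" is, so this sketch assumes a centered square whose side is half the block side (5x5 inside a 10x10 block). The function name and signature are hypothetical.

```cpp
#include <cstddef>
#include <cstdint>

// Core block average: average only a centered square inside the block.
// Assumption: the core side is half the block side (at least 1 pixel).
uint8_t coreBlockAverage(const uint8_t *src, size_t srcW,
                         size_t x0, size_t y0, size_t block) {
    const size_t core = block >= 2 ? block / 2 : 1;
    const size_t offset = (block - core) / 2;  // margin skipped on each side
    uint32_t sum = 0;
    for (size_t y = y0 + offset; y < y0 + offset + core; y++) {
        for (size_t x = x0 + offset; x < x0 + offset + core; x++) {
            sum += src[y * srcW + x];
        }
    }
    return static_cast<uint8_t>(sum / (core * core));
}
```

With 10x10 blocks this reads 25 pixels per block instead of 100.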

I'll stress this point: the only reason we downsample is to compare two sequential frames and detect whether they differ above a certain threshold. The downsampled image doesn't have to mimic the actual image: it can transform the source in any fancy way, as long as it stays consistent and captures the variations over time.

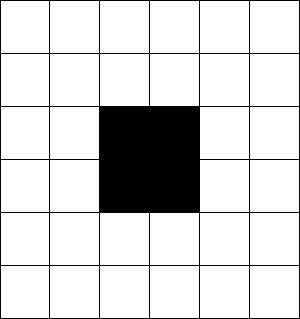

Cross block average

This time we consider all the pixels along the vertical and horizontal central axes of the block. The idea is that you capture a good portion of the variation along both axes, giving quite accurate results.
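A possible cross block average, again as a standalone function with a hypothetical signature. It averages the central row and the central column of the block, counting the shared center pixel only once (2N - 1 pixels in total); for even block sizes it assumes the row and column just past the geometric center.

```cpp
#include <cstddef>
#include <cstdint>

// Cross block average: central row + central column, shared pixel counted once.
uint8_t crossBlockAverage(const uint8_t *src, size_t srcW,
                          size_t x0, size_t y0, size_t block) {
    const size_t c = block / 2;  // index of the central row/column inside the block
    uint32_t sum = 0;
    for (size_t i = 0; i < block; i++) {
        sum += src[(y0 + c) * srcW + (x0 + i)];      // horizontal axis
        if (i != c) {
            sum += src[(y0 + i) * srcW + (x0 + c)];  // vertical axis, minus the center
        }
    }
    return static_cast<uint8_t>(sum / (2 * block - 1));
}
```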

Diagonal block average

This alternative, too, came to my mind out of nowhere, really. I just think it's a good way to capture the block's variation, possibly even better than the vertical and horizontal directions.
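A sketch of a diagonal block average, assuming "diagonal" means both the main diagonal and the anti-diagonal of the block (the post doesn't specify). The function name and signature are hypothetical; for odd block sizes the center pixel, shared by both diagonals, is counted once.

```cpp
#include <cstddef>
#include <cstdint>

// Diagonal block average: main diagonal + anti-diagonal of the block.
uint8_t diagonalBlockAverage(const uint8_t *src, size_t srcW,
                             size_t x0, size_t y0, size_t block) {
    uint32_t sum = 0;
    uint32_t count = 0;
    for (size_t i = 0; i < block; i++) {
        sum += src[(y0 + i) * srcW + (x0 + i)];  // main diagonal
        count++;
        const size_t j = block - 1 - i;          // anti-diagonal column for row i
        if (j != i) {                            // odd sizes: skip the shared center
            sum += src[(y0 + i) * srcW + (x0 + j)];
            count++;
        }
    }
    return static_cast<uint8_t>(sum / count);
}
```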

Implement your own

Not satisfied with the methods above? No problem, you can still implement your own.

The ones presented above are just some algorithms that came to my mind: I'm not telling you they're the best.

They worked for me, that's it.

If you think you've found a better solution, I encourage you to implement it and even share it with me and the other readers, so we can all make progress on this together.

Benchmarks

So, at the very beginning I said this new implementation is blazingly fast.

How fast?

As fast as it can be, arguably.

I mean, so fast it won't affect your FPS.

Look at the results I got on my M5Stack camera.

| Algorithm | Time to execute (µs) | FPS |
|---|---|---|
| None | 0 | 25 |
| Nearest neighbor | 160 | 25 |
| Cross block | 700 | 25 |
| Core block | 800 | 25 |
| Diagonal block | 950 | 25 |
| Full block | 4900 | 12 |

As you can see, only the full block average introduces a delay in the process (and quite a big one: the frame rate drops from 25 to 12 FPS), while the other methods won't slow down your program in any noticeable way.

If you test nearest neighbor and it works for you, you'll be extremely light on computational resources, with only 160 microseconds of delay.

This is what I mean by blazing fast.

Motion detection

The motion detection part hasn't changed, so I refer you to the original post to read more about the block difference threshold and the image difference threshold.
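For quick reference, this is the rule implemented by `motionDetect()` in the full code, written as a formula. For each block $i$ of the downsampled frames, $c_i$ is its current value, $p_i$ its previous value (assumed non-zero here), $\tau_b$ is `BLOCK_DIFF_THRESHOLD`, $\tau_f$ is `IMAGE_DIFF_THRESHOLD`, and $W_d \times H_d$ is the size of the downsampled image:

```math
\delta_i = \frac{|c_i - p_i|}{p_i}, \qquad
\text{motion} \iff \frac{\#\{\, i : \delta_i \ge \tau_b \,\}}{W_d \, H_d} > \tau_f
```

With the settings in the full code ($W_d \times H_d = 32 \times 24 = 768$ blocks, $\tau_b = 0.2$, $\tau_f = 0.1$), a block counts as changed when its value moves by at least 20% of its previous value, and motion is reported when more than 76.8, i.e. at least 77, blocks change.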

Full code

```cpp
#define CAMERA_MODEL_M5STACK_WIDE
#include "EloquentVision.h"

#define FRAME_SIZE FRAMESIZE_QVGA
#define SOURCE_WIDTH 320
#define SOURCE_HEIGHT 240
#define BLOCK_SIZE 10
#define DEST_WIDTH (SOURCE_WIDTH / BLOCK_SIZE)
#define DEST_HEIGHT (SOURCE_HEIGHT / BLOCK_SIZE)
#define BLOCK_DIFF_THRESHOLD 0.2
#define IMAGE_DIFF_THRESHOLD 0.1
#define DEBUG 0

using namespace Eloquent::Vision;

ESP32Camera camera;
uint8_t prevFrame[DEST_WIDTH * DEST_HEIGHT] = { 0 };
uint8_t currentFrame[DEST_WIDTH * DEST_HEIGHT] = { 0 };

// function prototypes
bool motionDetect();
void updateFrame();

/**
 * Start serial output and the camera (QVGA, grayscale)
 */
void setup() {
    Serial.begin(115200);
    camera.begin(FRAME_SIZE, PIXFORMAT_GRAYSCALE);
}

/**
 * Algorithm:
 * 1. grab frame
 * 2. compare with previous to detect motion
 * 3. update previous frame
 */
void loop() {
    unsigned long start = millis();  // millis() returns unsigned long
    camera_fb_t *frame = camera.capture();

    // skip this iteration if the capture failed
    if (frame == NULL)
        return;

    // collapse each BLOCK_SIZE x BLOCK_SIZE block into one pixel (nearest neighbor)
    downscaleImage(frame->buf, currentFrame, nearest, SOURCE_WIDTH, SOURCE_HEIGHT, BLOCK_SIZE);

    if (motionDetect()) {
        Serial.print("Motion detected @ ");
        Serial.print(floor(1000.0f / (millis() - start)));
        Serial.println(" FPS");
    }

    updateFrame();
}

/**
 * Compute the number of different blocks
 * If there are enough, then motion happened
 */
bool motionDetect() {
    uint16_t changes = 0;
    const uint16_t blocks = DEST_WIDTH * DEST_HEIGHT;

    for (int y = 0; y < DEST_HEIGHT; y++) {
        for (int x = 0; x < DEST_WIDTH; x++) {
            float current = currentFrame[y * DEST_WIDTH + x];
            float prev = prevFrame[y * DEST_WIDTH + x];
            // relative change of this block; avoid dividing by zero when the
            // previous value is 0 (e.g. before the first updateFrame())
            float delta = fabs(current - prev) / (prev > 0 ? prev : 1);

            if (delta >= BLOCK_DIFF_THRESHOLD)
                changes += 1;
        }
    }

    return (1.0 * changes / blocks) > IMAGE_DIFF_THRESHOLD;
}

/**
 * Copy current frame to previous
 */
void updateFrame() {
    memcpy(prevFrame, currentFrame, DEST_WIDTH * DEST_HEIGHT);
}
```

Check out the full project code on GitHub and remember to star it!